How AI Understands Language: Simple NLP Guide for Beginners

Artificial Intelligence can read, write, answer questions, and hold conversations — but how does it understand language?

This lesson explains Natural Language Processing (NLP) in simple terms.

You will learn:

• What NLP is

• How AI understands sentences

• What tokens are

• Why context matters

• Real-life NLP examples

What is NLP? (Simple Definition)

NLP stands for Natural Language Processing.

It is the field of AI that helps computers understand and generate human language.

Humans understand meaning.

AI finds patterns in text and predicts the most likely next words.

Example:

If you type “The sky is”, AI predicts “blue” because it has seen that pattern many times.
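Here is a minimal Python sketch of pattern prediction. It counts which word follows a phrase in a tiny, made-up set of sentences and picks the most frequent one. The corpus and the `predict_next` function are hypothetical illustrations. Real language models learn far richer patterns with neural networks rather than simple counting.

```python
from collections import Counter

# A tiny, made-up "training" corpus (hypothetical example data).
corpus = [
    "the sky is blue",
    "the sky is blue today",
    "the sky is clear",
    "the sky is blue and bright",
    "the sea is calm",
]

def predict_next(prompt: str, sentences: list[str]) -> str:
    """Return the word that most often follows `prompt` at the start of a sentence."""
    prompt_words = prompt.lower().split()
    n = len(prompt_words)
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        # If the sentence starts with the prompt, record the word that comes next.
        if words[:n] == prompt_words and len(words) > n:
            counts[words[n]] += 1
    if not counts:
        return ""  # the prompt never appeared, so there is nothing to predict
    return counts.most_common(1)[0][0]

print(predict_next("The sky is", corpus))  # blue
```

"blue" wins because it follows "the sky is" three times in this corpus, while "clear" appears only once.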

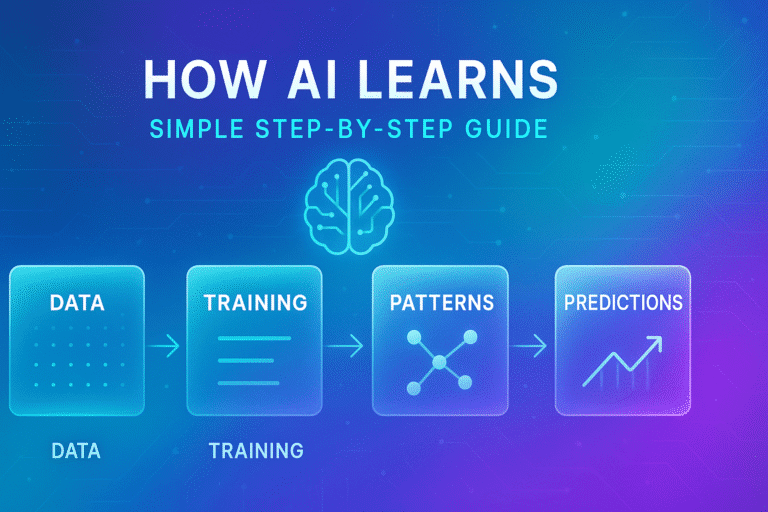

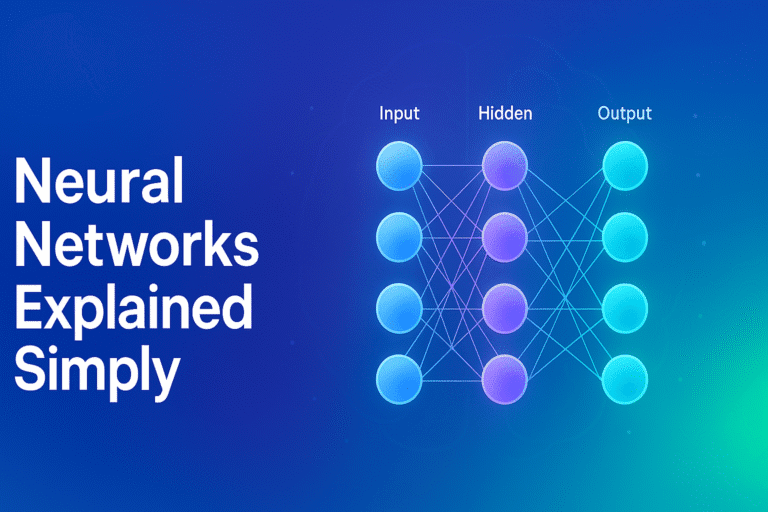

How AI Understands Language (Step-By-Step)

- Breaks text into tokens (small text chunks)

- Looks at patterns between tokens

- Uses patterns learned during training to work out the likely meaning

- Generates the most likely response

AI doesn’t “feel” the meaning — it calculates relationships.
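The four steps above can be imitated with a toy Python sketch. It splits text into word tokens, counts which token follows which, and then repeatedly picks the most likely next token to build a response. All names here (`tokenize`, `learn_patterns`, `generate`) and the training text are hypothetical. Counting word pairs is a big simplification of what real models do.

```python
from collections import Counter, defaultdict

def tokenize(text: str) -> list[str]:
    # Step 1: break text into tokens (here: lowercase words split on spaces).
    return text.lower().split()

def learn_patterns(tokens: list[str]) -> dict[str, Counter]:
    # Step 2: record which token follows which (pair counts).
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows

def generate(start: str, follows: dict[str, Counter], max_tokens: int = 5) -> str:
    # Steps 3-4: repeatedly pick the most likely next token (a greedy choice).
    output = [start]
    for _ in range(max_tokens):
        options = follows.get(output[-1])
        if not options:
            break  # no known continuation for the last token
        output.append(options.most_common(1)[0][0])
    return " ".join(output)

training_text = "the cat sat on the mat . the cat ate the fish ."
patterns = learn_patterns(tokenize(training_text))
print(generate("the", patterns, max_tokens=3))  # the cat sat on
```

"cat" is the most common word after "the". When two options tie ("sat" and "ate" after "cat"), `Counter.most_common` returns the one it saw first.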

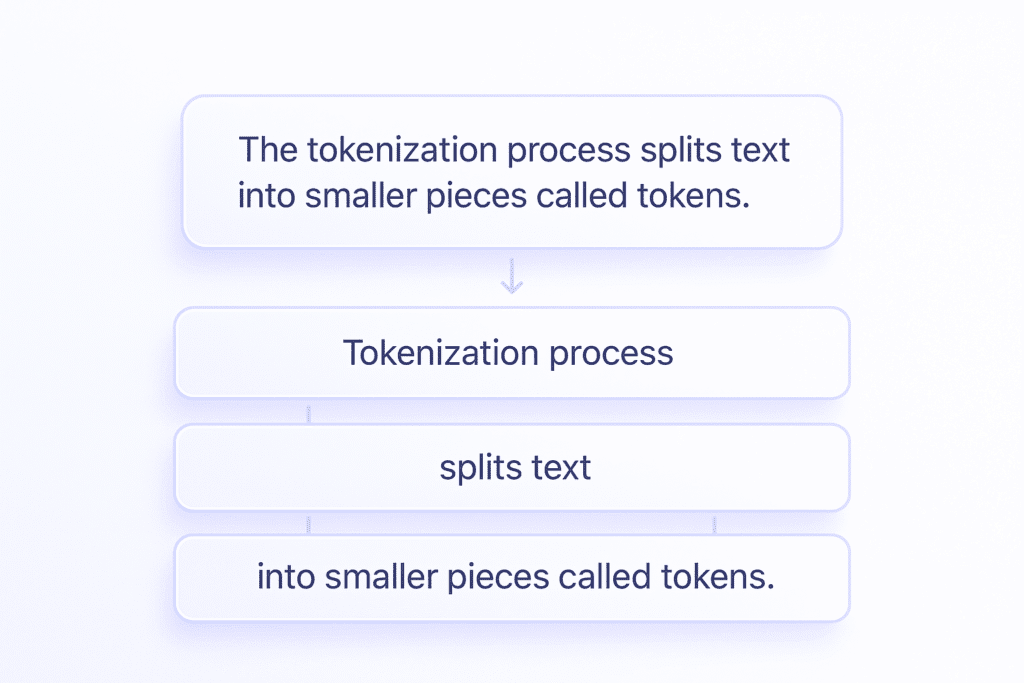

What Are Tokens?

Tokens = small pieces of text.

Example:

“Learning AI is fun” might become:

• Learning

• AI

• is

• fun

AI uses tokens to learn language structure.
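Here is a minimal Python sketch of word-level tokenization. It assumes a simple rule: letters and digits form words, and each punctuation mark becomes its own token. The `word_tokens` helper is hypothetical. Real AI models use more complex tokenizers that can also split words into smaller pieces.

```python
import re

def word_tokens(text: str) -> list[str]:
    # Match runs of letters/digits, or any single character that is not a letter/digit/space.
    return re.findall(r"\w+|[^\w\s]", text)

print(word_tokens("Learning AI is fun"))   # ['Learning', 'AI', 'is', 'fun']
print(word_tokens("Learning AI is fun!"))  # ['Learning', 'AI', 'is', 'fun', '!']
```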

What Is Context in Language? (Simple Explanation)

Context means the words and information around a word or sentence that help determine its meaning.

Humans use context naturally in communication.

AI uses context mathematically — by analyzing surrounding tokens.

Example:

- “I went to the bank to deposit money.” → bank = financial place

- “We sat by the bank to watch the river.” → bank = river edge

The same word changes meaning depending on context.

Context helps AI understand:

• Which meaning fits

• Sentence purpose

• Tone and intent

Context = the surrounding clues that give meaning.
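Here is a deliberately simple Python sketch of using context to choose a meaning. It scores each meaning of "bank" by counting how many hand-picked clue words appear in the sentence. The clue lists and `guess_sense` are made up for illustration and are loosely inspired by classic clue-word overlap methods. Modern AI learns context from huge amounts of text, not from hand-written lists.

```python
# Hypothetical clue words for each meaning of "bank" (made up for this example).
SENSES = {
    "financial place": {"money", "deposit", "account", "loan", "cash"},
    "river edge": {"river", "water", "fishing", "shore", "boat"},
}

def guess_sense(sentence: str) -> str:
    # Lowercase and strip basic punctuation so "river." matches "river".
    words = {w.strip(".,!?").lower() for w in sentence.split()}
    # Score each meaning by how many of its clue words appear in the sentence.
    scores = {sense: len(words & clues) for sense, clues in SENSES.items()}
    # Highest score wins; on a tie, the meaning listed first is returned.
    return max(scores, key=scores.get)

print(guess_sense("I went to the bank to deposit money."))    # financial place
print(guess_sense("We sat by the bank to watch the river."))  # river edge
```

If no clue words appear, every score is 0 and the sketch simply falls back to the first meaning listed. This is one reason real systems learn from data instead.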

Why Context Matters

AI doesn’t just read words — it studies context.

“Bank” in:

• “river bank”

• “bank account”

Same word, different meaning.

AI looks at the nearby words to decide which meaning fits.
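Here is a minimal Python sketch of "looking at nearby words". It assumes a fixed window of 2 words on each side of the target word. The `nearby_words` helper and the window size are hypothetical. Large language models can weigh every token in their context window, not just a couple of neighbors.

```python
def nearby_words(sentence: str, target: str, window: int = 2) -> list[str]:
    """Return up to `window` words on each side of the first occurrence of `target`."""
    words = [w.strip(".,!?").lower() for w in sentence.split()]
    if target not in words:
        return []
    i = words.index(target)
    # Slice out the neighbors before and after the target word.
    return words[max(0, i - window):i] + words[i + 1:i + 1 + window]

print(nearby_words("We sat on the river bank all afternoon.", "bank"))
# ['the', 'river', 'all', 'afternoon']
print(nearby_words("She opened a bank account yesterday.", "bank"))
# ['opened', 'a', 'account', 'yesterday']
```

The neighbors "river" and "account" are the clues that point to two different meanings of the same word.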

Real Examples of NLP

• ChatGPT

• Google Search autocomplete

• Translation apps (such as Google Translate)

• Voice assistants (Siri, Alexa)

• Grammar correction tools

Mini Exercise

Write one sentence.

Then write a different sentence that uses the same keyword with a different meaning.

This helps you see how context decides meaning, which is exactly what AI learns to detect.

✅ 4 Image Prompts

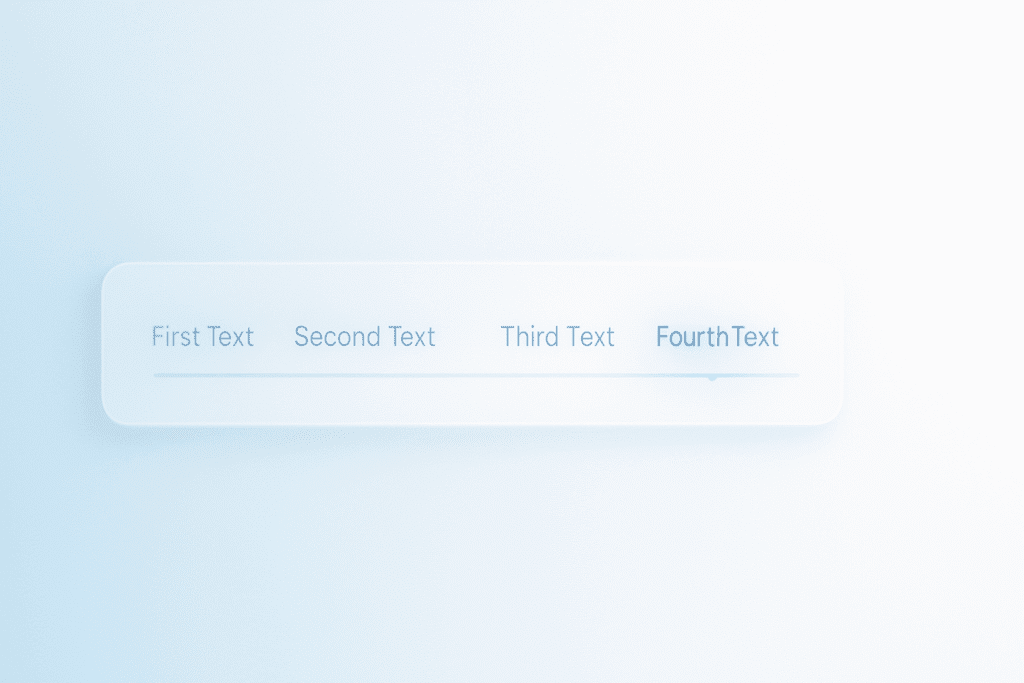

- Modern UI graphic: sentence breaking into tokens, soft white background, pastel blue, subtle glass style, thin font, 16:9

- Floating language bubbles turning into digital tokens, soft edges, premium Apple-style, 16:9

- Two meanings of same word with context circles, clean educational style, 16:9

- Chat interface style showing prediction of next word, minimal UI, white + blue tones, 16:9

✅ Tags (6)

• natural language processing

• NLP explained

• how AI understands text

• beginner AI lessons

• AI language learning

• tokens and language models

✅ Meta Description

Learn how AI understands language using Natural Language Processing (NLP). Simple explanation of tokens, context, and language patterns with real examples for beginners.

✅ AI Lesson 5: What Are Tokens & Context Windows? (Simple Guide)

Title

Tokens & Context Windows Explained Simply (AI Language Learning Guide)

Article

To understand how AI reads and writes text, you must understand tokens and context windows.

What Are Tokens?

Tokens are small pieces of text AI uses to process language.

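1 token ≈ 1 short word or piece of a word (for English text, roughly 4 characters on average).

Examples:

“Information” might be split into “Infor” + “mation”

“ChatGPT learns fast” is 3 words, but a tokenizer may split “ChatGPT” into several pieces, so the exact token count depends on the tokenizer.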
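Here is a minimal Python sketch of splitting words into smaller pieces. It uses one simple strategy, greedy longest match: at each position, take the longest piece found in the vocabulary. The tiny vocabulary and the `subword_tokens` helper are hypothetical, and text is lowercased for simplicity. The tokenizers in real models (for example, byte-pair encoding) learn their pieces from data and will split words differently.

```python
# Hypothetical toy vocabulary; real tokenizers learn many thousands of pieces from data.
VOCAB = {"infor", "mation", "chat", "learn", "s", "fast", "g", "pt"}

def subword_tokens(word: str, vocab: set[str]) -> list[str]:
    """Split `word` by repeatedly taking the longest vocabulary piece that matches."""
    word = word.lower()  # lowercased for simplicity
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest possible piece first, then shorter ones.
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:
            # No vocabulary piece matched: fall back to a single character.
            pieces.append(word[start])
            start += 1
    return pieces

print(subword_tokens("Information", VOCAB))  # ['infor', 'mation']

tokens = [piece for word in "ChatGPT learns fast".split() for piece in subword_tokens(word, VOCAB)]
print(tokens, len(tokens))  # ['chat', 'g', 'pt', 'learn', 's', 'fast'] 6
```

With this toy vocabulary, the 3-word sentence becomes 6 tokens. This shows why token counts depend on the tokenizer and not only on the number of words.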

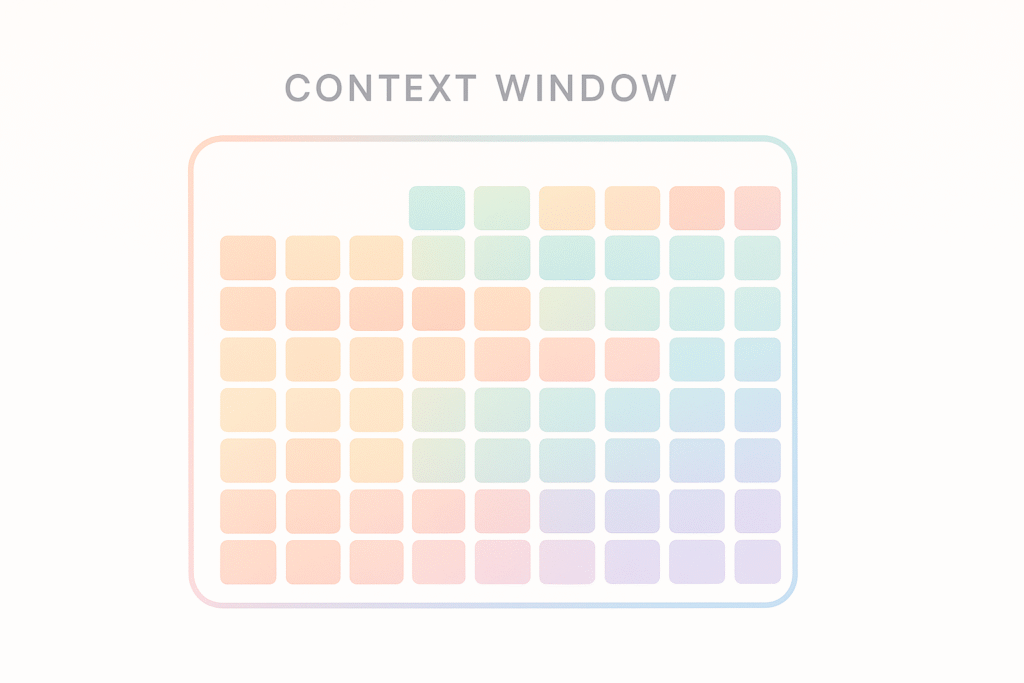

What is a Context Window?

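The context window is the maximum number of tokens an AI model can work with at one time (your input plus its reply).

It works like short-term memory.

If the context window = 8,000 tokens,

the model can take in up to 8,000 tokens at once. In a long conversation, older tokens beyond that limit are dropped or summarized (depending on the app), so the model no longer sees them.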
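Here is a minimal Python sketch of what happens when a conversation outgrows the context window. It assumes the simplest strategy, keeping only the most recent tokens, and it treats each word or punctuation mark as one token. The `fit_to_context` helper and the tiny window of 8 are hypothetical values chosen for readability, not a real model's limit.

```python
def fit_to_context(tokens: list[str], window: int) -> list[str]:
    """Keep only the most recent `window` tokens, dropping the oldest ones."""
    if window <= 0:
        return []  # guard: tokens[-0:] would return the whole list
    return tokens[-window:]

conversation = "my name is Sam . I like cats . what is my name ?".split()
visible = fit_to_context(conversation, 8)
print(visible)  # ['like', 'cats', '.', 'what', 'is', 'my', 'name', '?']
```

"Sam" falls outside the 8-token window, so a model that sees only `visible` can no longer answer the question. This is the "forgetting" described above.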

Why Tokens Matter

Tokens affect:

• How much AI can read at once

• How long your conversation can be

• Cost of processing (more tokens = more compute)

• The quality of AI’s answers (anything cut off from the context can’t be used)
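Here is a minimal Python sketch of estimating tokens and cost. It uses the commonly cited rule of thumb of about 4 characters per token for English text. The price per 1,000 tokens is a hypothetical number for illustration only, since real prices vary by provider and model. Both helpers are also hypothetical.

```python
import math

CHARS_PER_TOKEN = 4          # rough rule of thumb for English text
PRICE_PER_1K_TOKENS = 0.002  # hypothetical price in dollars, for illustration only

def estimate_tokens(text: str) -> int:
    """Roughly estimate how many tokens a piece of English text uses."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)

def estimate_cost(num_tokens: int, price_per_1k: float = PRICE_PER_1K_TOKENS) -> float:
    """Cost grows in direct proportion to the number of tokens."""
    return num_tokens / 1000 * price_per_1k

print(estimate_tokens("Learning AI is fun"))  # 5  (18 characters / 4, rounded up)
print(estimate_cost(10_000))  # ten times the price of 1,000 tokens
```

Doubling the number of tokens doubles the estimated cost. This is the "more tokens = more compute" point in numbers.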

Why Context Window Matters

Bigger context window = AI can follow longer:

• Conversations

• Documents

• Stories

• Code files

Small context = forgets earlier details.

Large context = stronger memory.
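Here is a minimal Python sketch that compares a small and a large context window. It calculates how many separate pieces a long document must be split into before a model can read all of it. The document length and both window sizes are example values, and the sketch ignores the room a model also needs for its reply.

```python
import math

def chunks_needed(doc_tokens: int, window: int) -> int:
    """How many window-sized pieces a document must be split into."""
    return math.ceil(doc_tokens / window)

doc_tokens = 100_000  # hypothetical length of a long report, in tokens
for window in (8_000, 128_000):
    n = chunks_needed(doc_tokens, window)
    status = "fits at once" if n == 1 else f"needs {n} separate pieces"
    print(f"{window:,}-token window: {status}")
# 8,000-token window: needs 13 separate pieces
# 128,000-token window: fits at once
```

With the small window, the model never sees the whole report at once, so details from early pieces can be lost.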

Real Examples

Small context model:

Forgets what you said earlier.

Large context model:

Can read long documents, or even entire books or code projects, at once, as long as they fit within its token limit.

Mini Exercise

Write a 10-word sentence.

Then split it into tokens manually.

This helps you understand how AI sees text.